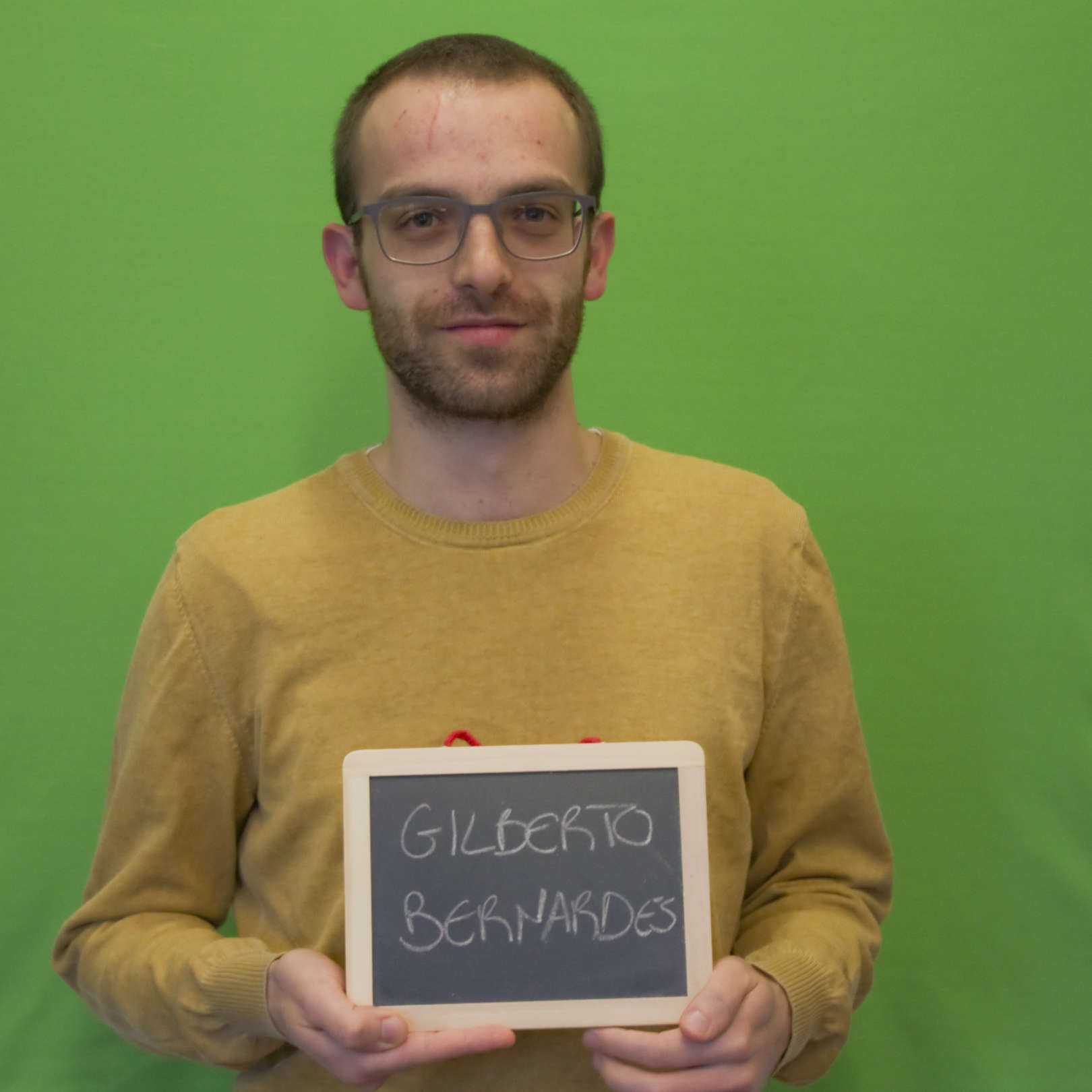

About

Gilberto Bernardes holds a Ph.D. in Digital Media (2014) from the Universidade do Porto, under the auspices of the University of Texas at Austin, and a Master of Music cum laude (2008) from the Amsterdamse Hogeschool voor de Kunsten. He is currently an Assistant Professor at the Universidade do Porto and a Senior Researcher at INESC TEC, where he leads the Sound and Music Computing Lab. He has more than 90 publications, including 14 articles in high-impact peer-reviewed journals (mostly Q1 and Q2 in Scimago) and 14 book chapters, and has co-authored scientific papers with 152 international collaborators. Bernardes contributes continuously to the training of junior scientists: he is currently supervising six Ph.D. theses and has supervised more than 40 completed Master's dissertations.

He has received nine awards, including the Fraunhofer Portugal Prize for the best Ph.D. thesis and several best paper awards at conferences (e.g., DCE and CMMR). He has participated in 12 R&D projects as both a senior and a junior researcher. In the eight years since his Ph.D. defense, Bernardes has attracted competitive funding for an FCT-funded post-doctoral project and an exploratory grant for a market-based R&D prototype. He currently leads the Portuguese team at INESC TEC, as Work Package leader, in the Horizon Europe project EU-DIGIFOLK and the Erasmus+ project Open Minds. His latest research focuses on cognitively inspired tonal music representations and sound synthesis.

As a performer, Bernardes has appeared at distinguished venues and events such as the Bimhuis, the Concertgebouw, Casa da Música, Berklee College of Music, New York University, and the Seoul Computer Music Festival.