About

Jaime S. Cardoso received a Licenciatura (5-year degree) in Electrical and Computer Engineering in 1999, an MSc in Mathematical Engineering in 2005, and a Ph.D. in Computer Vision in 2006, all from the University of Porto.

Cardoso is an Associate Professor with Habilitation at the Faculty of Engineering of the University of Porto (FEUP), where he teaches Machine Learning and Computer Vision in doctoral programs, along with several graduate courses. He is currently a Senior Researcher in the ‘Information Processing and Pattern Recognition’ Area of the Telecommunications and Multimedia Unit of INESC TEC. He is also a Senior Member of the IEEE and co-founder of ClusterMedia Labs, an IT company developing automatic solutions for semantic audio-visual analysis.

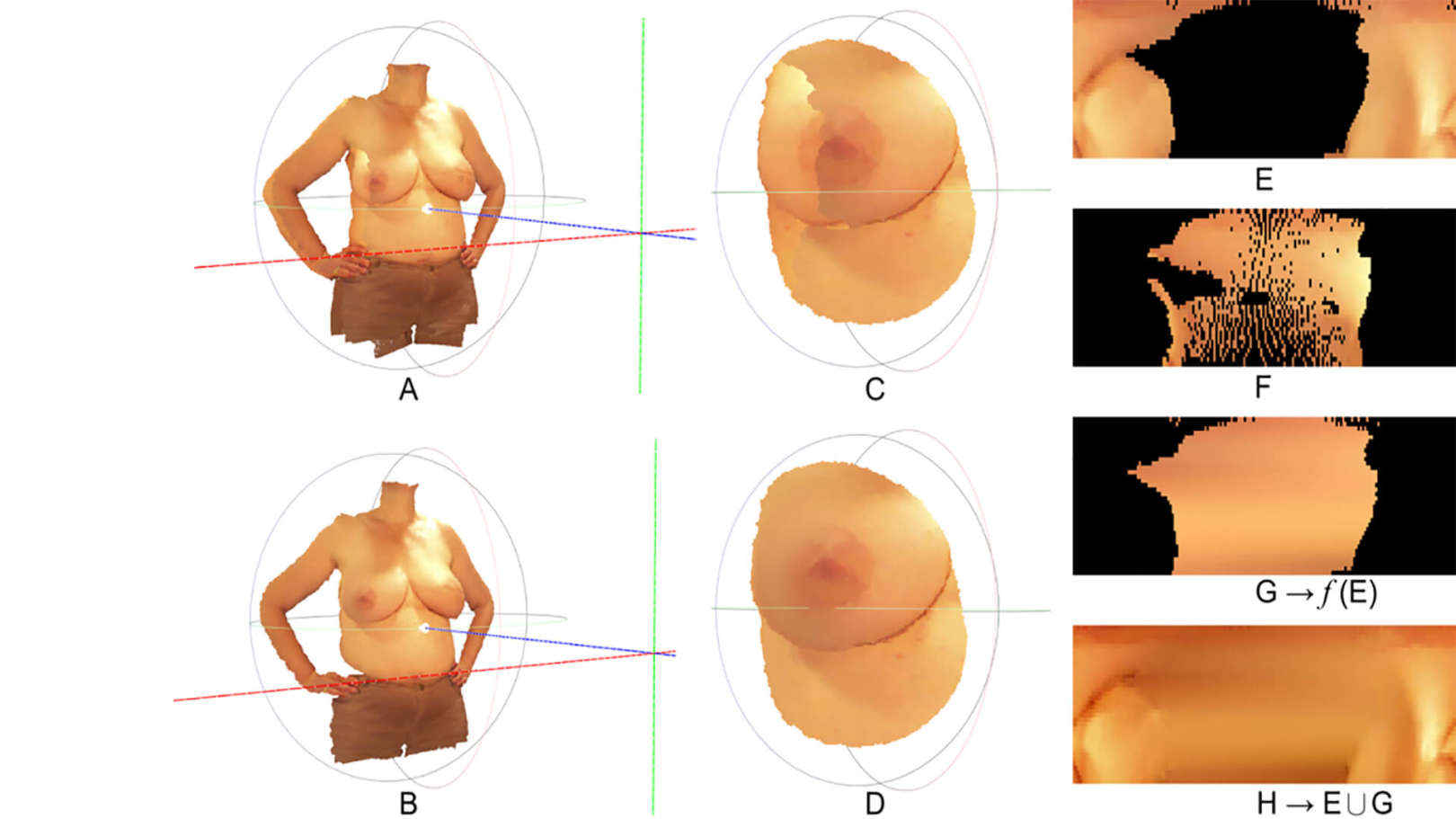

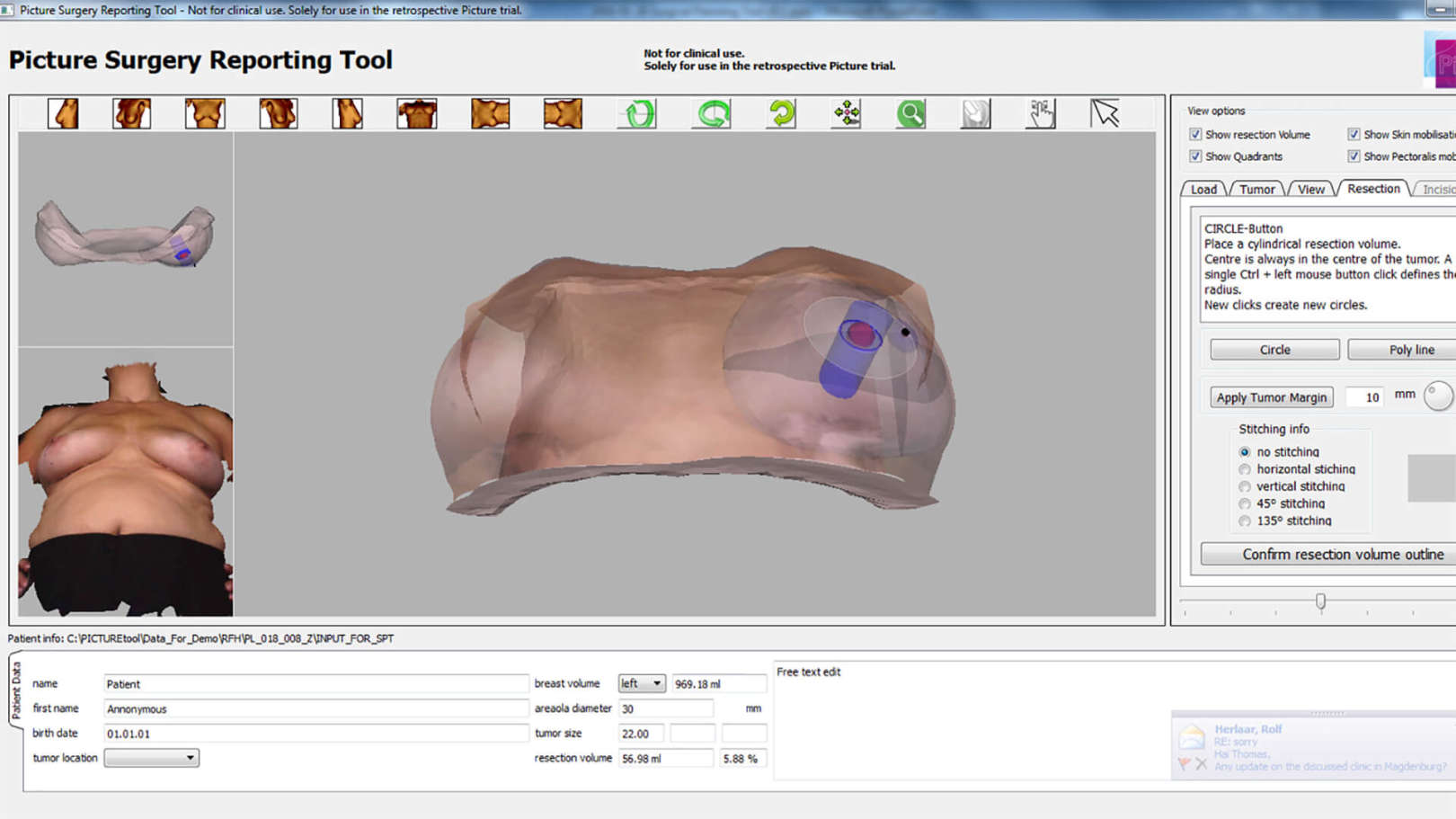

His research centers on three major topics: computer vision, machine learning, and decision support systems. Cardoso has co-authored 150+ papers, 50+ of them in international journals. He has received numerous awards, including an Honorable Mention in the Exame Informática Award 2011, software category, for the project “Semantic PACS”, and First Place in the ICDAR 2013 Music Scores Competition: Staff Removal (task: staff removal with local noise) in August 2013. His research has been recognized both by his peers, with 6500+ citations to his publications, and by the mainstream media, which has covered it several times.